Developing visual conventions for explainable LLM outputs in intelligence analysis summaries.

Project Overview

This project explored how new visual conventions might communicate uncertainty in LLM-generated summaries to intelligence analysts, improving trust calibration and supporting informed decision-making. It was completed in partnership with the Laboratory for Analytic Sciences (LAS), a mission-oriented academic-industry-government research collaboration.

My Responsibilities

User research, concept development, and interface design and prototyping.

Problem Statement

Intelligence analysts increasingly rely on partially LLM-generated summaries to process complex data, but the lack of visual conventions for representing confidence and uncertainty limits their ability to validate outputs and make informed decisions. Our research investigated potential innovations in visualization and interface design to support more efficient and reliable human-machine teaming.

Key Outcomes

Visual Conventions for Uncertainty

We developed and iterated on a range of new visual conventions for conveying uncertainty, applying emergent criteria to select the most effective visualizations:

- Experiential: Conveys uncertainty intuitively at a glance.

- Reflective: Encourages deeper understanding upon inspection.

- Legible: Maintains clarity in the appropriate context.

- Implementable: Compatible with existing display technologies.

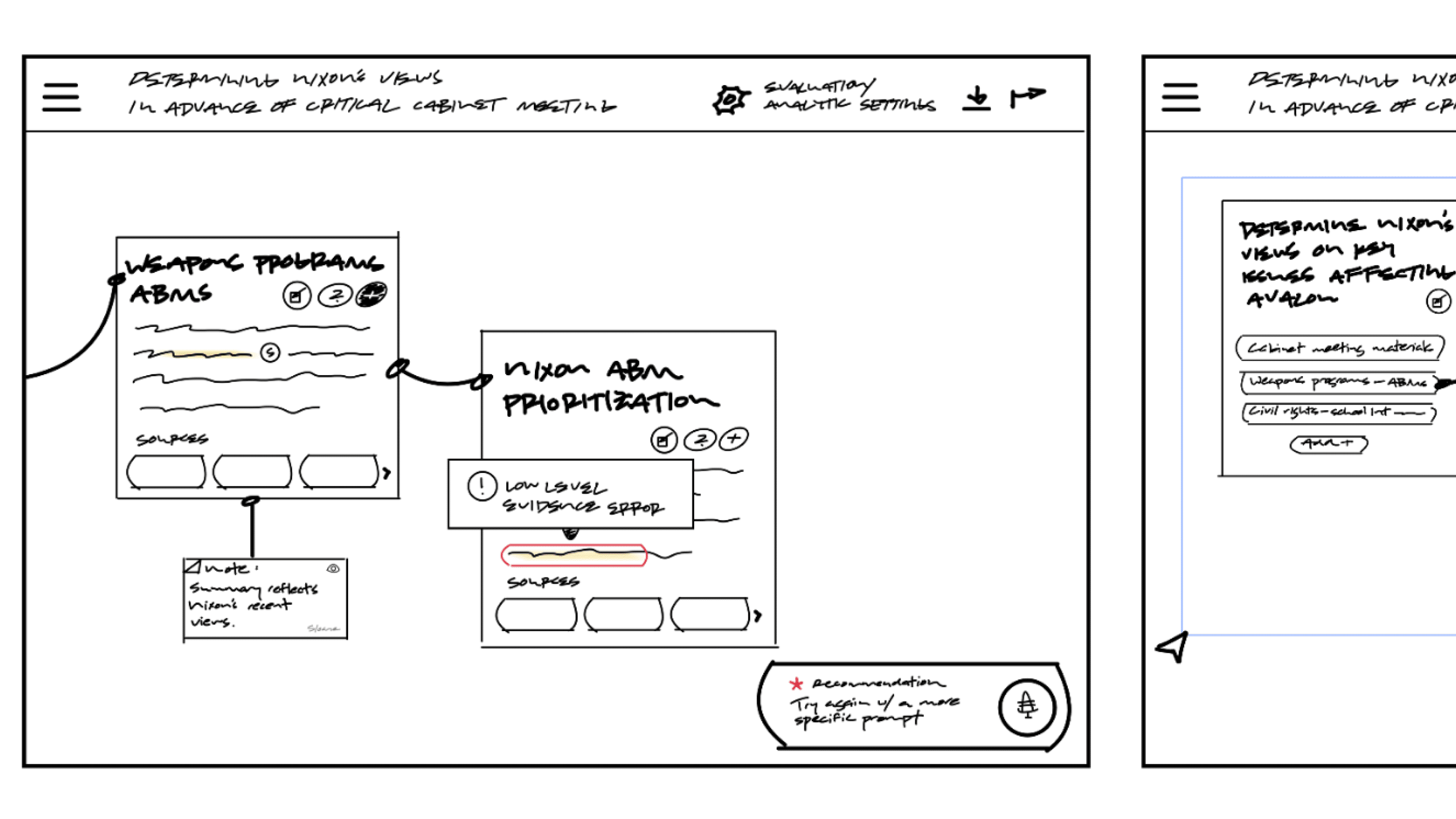

Speculative Interfaces

We designed three speculative interfaces to envision how AI could support an analyst’s investigation of uncertainty in the future.

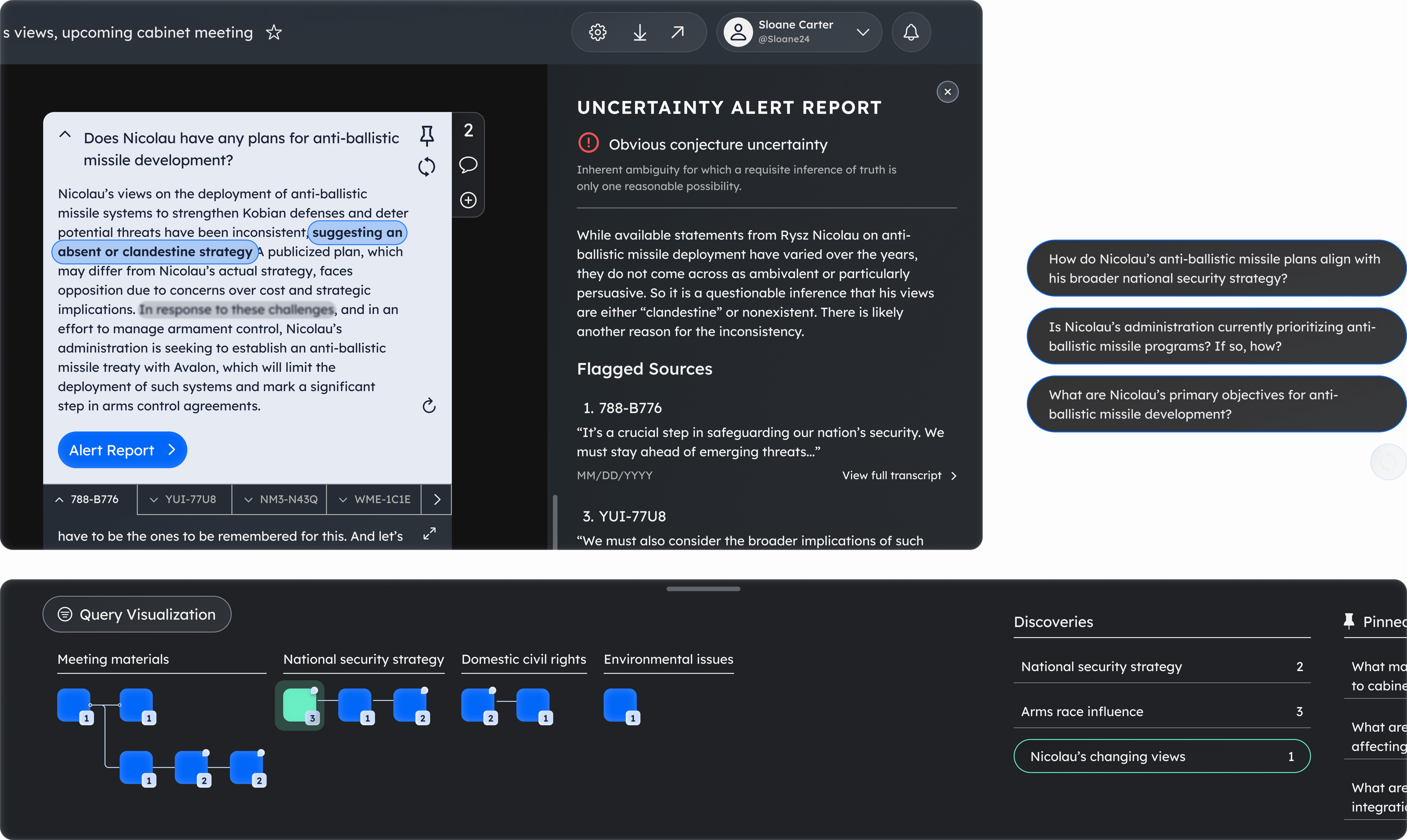

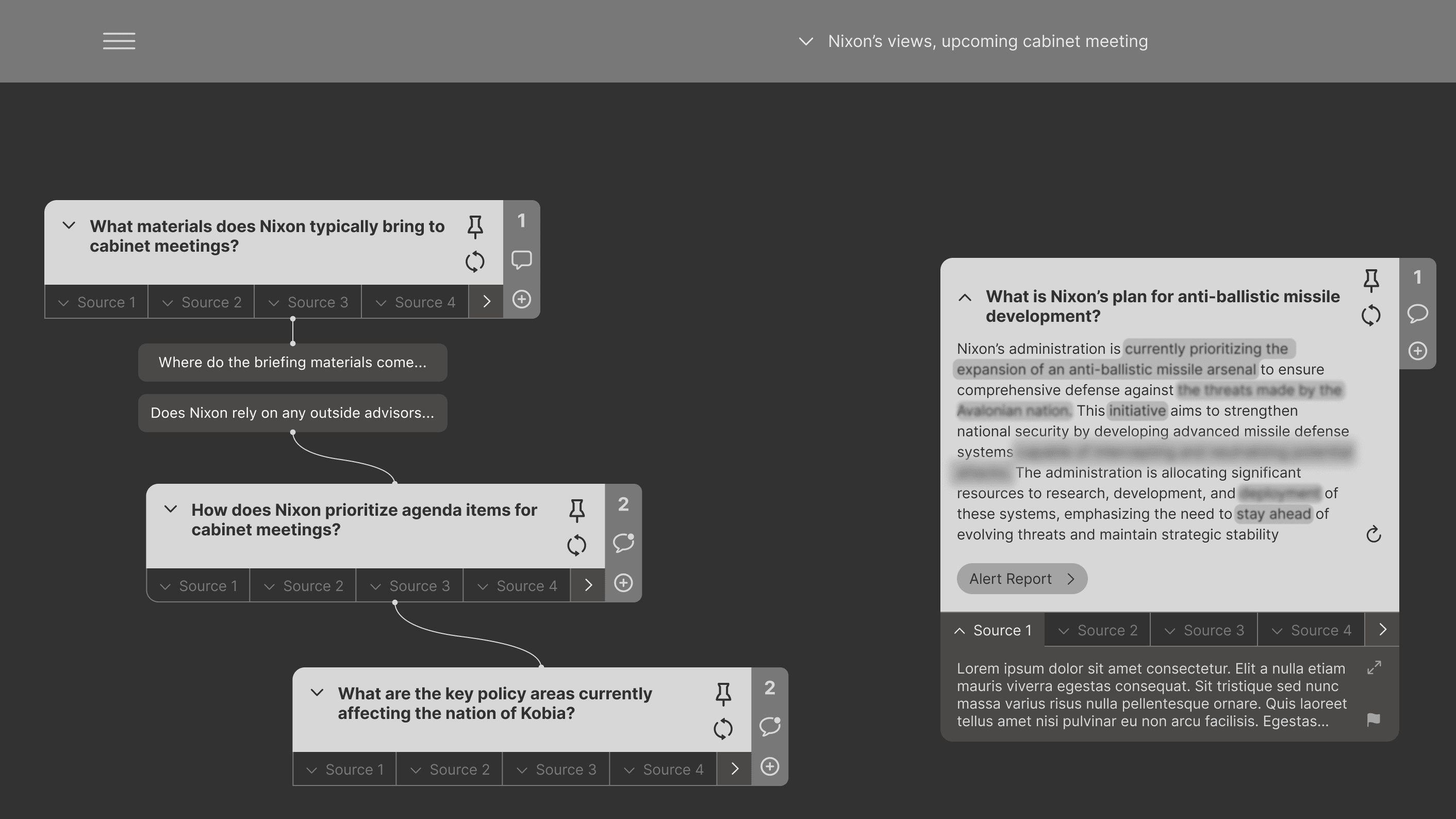

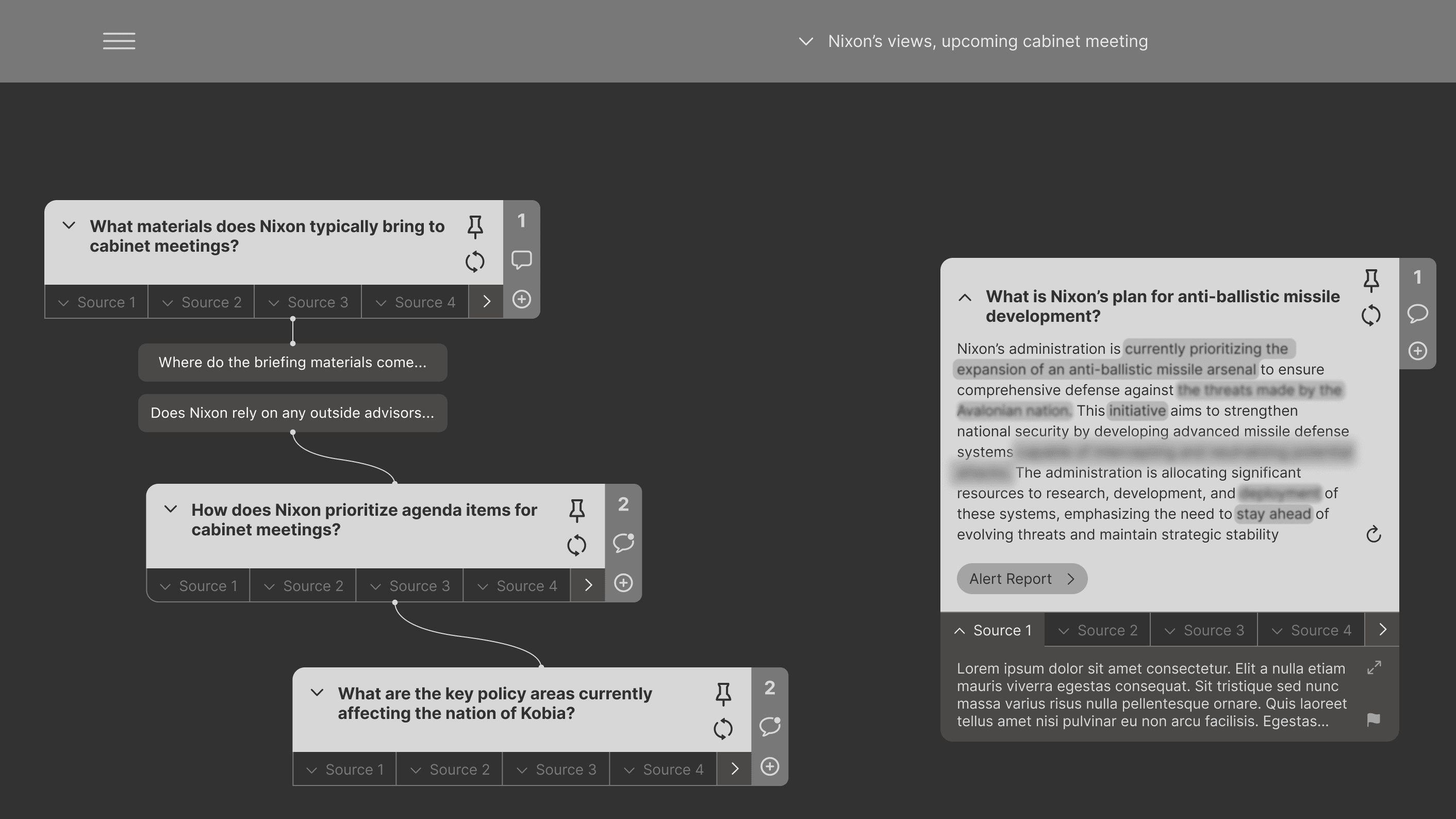

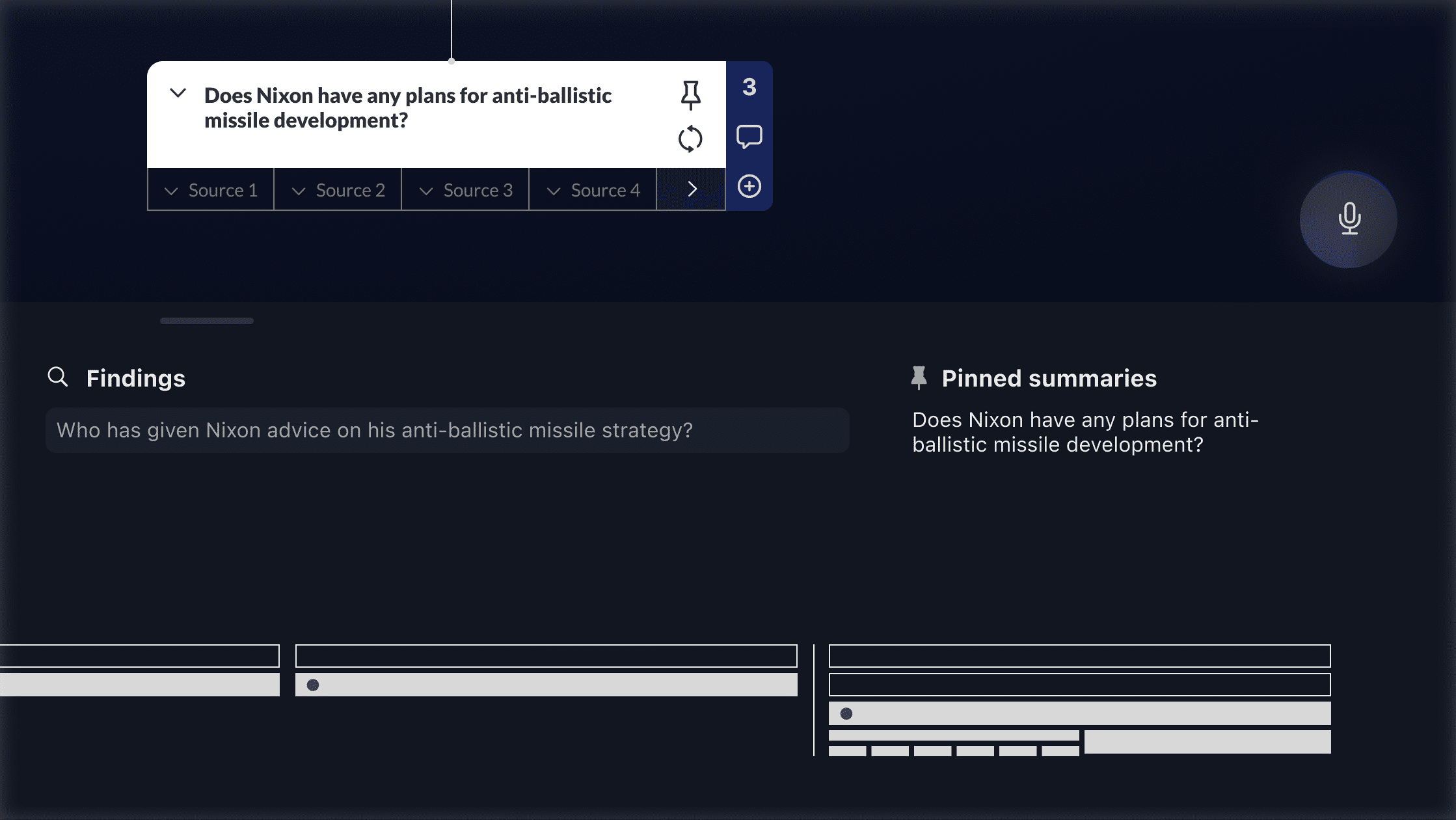

Concept 1: History Aperture

This concept dynamically reconfigures UI elements to guide the user’s attention, creating a visual language for their analytic process and query history. The design uses query recommendations, nudging, verification, and source investigation via a conversational interface to calibrate trust between agents and users.

Uncertainty Verification and Source Checks

The interface reassures the user by offering context, clarifications, and guidance, fostering confidence in results.

Expanding the Query Process

The interface provides recommendations and nudges, helping the user revisit searches and uncover key insights more effectively.

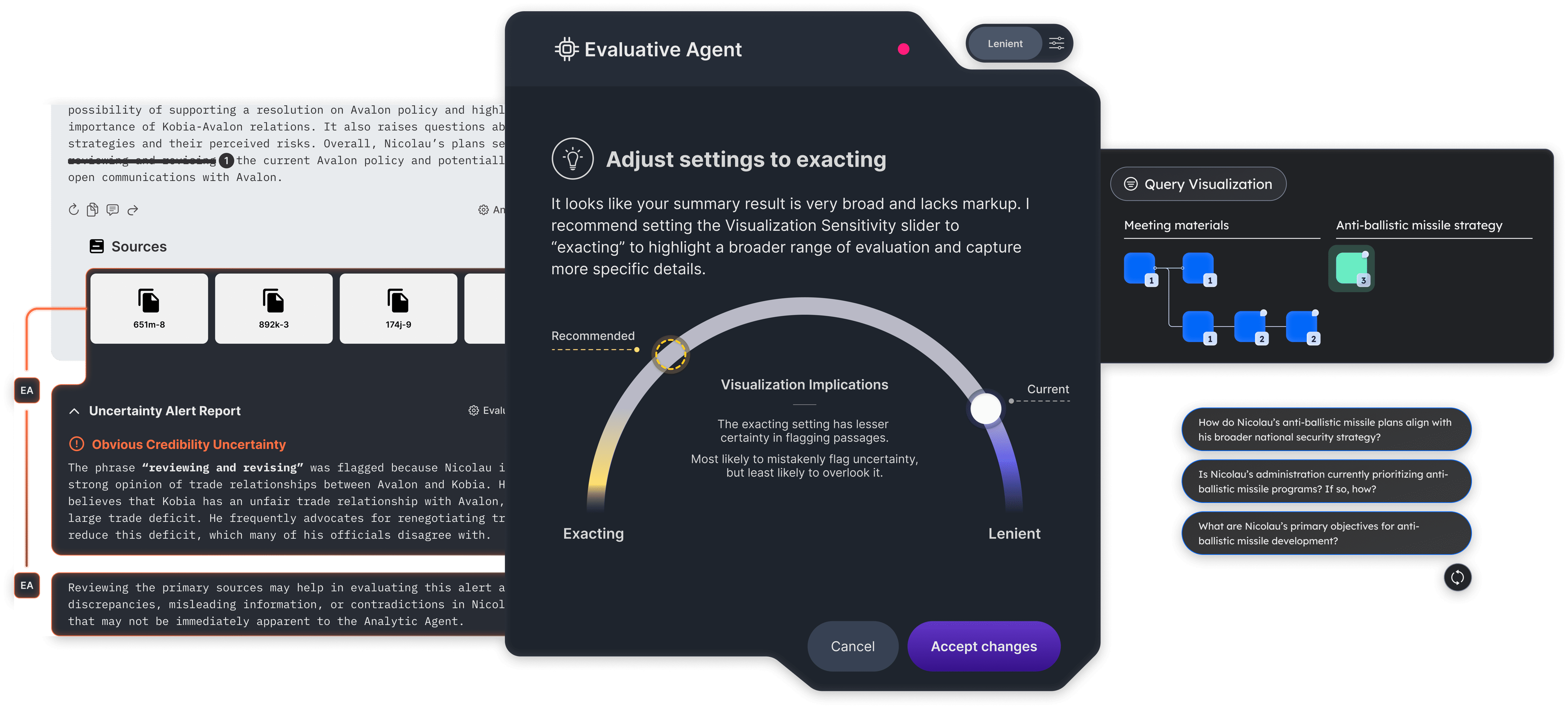

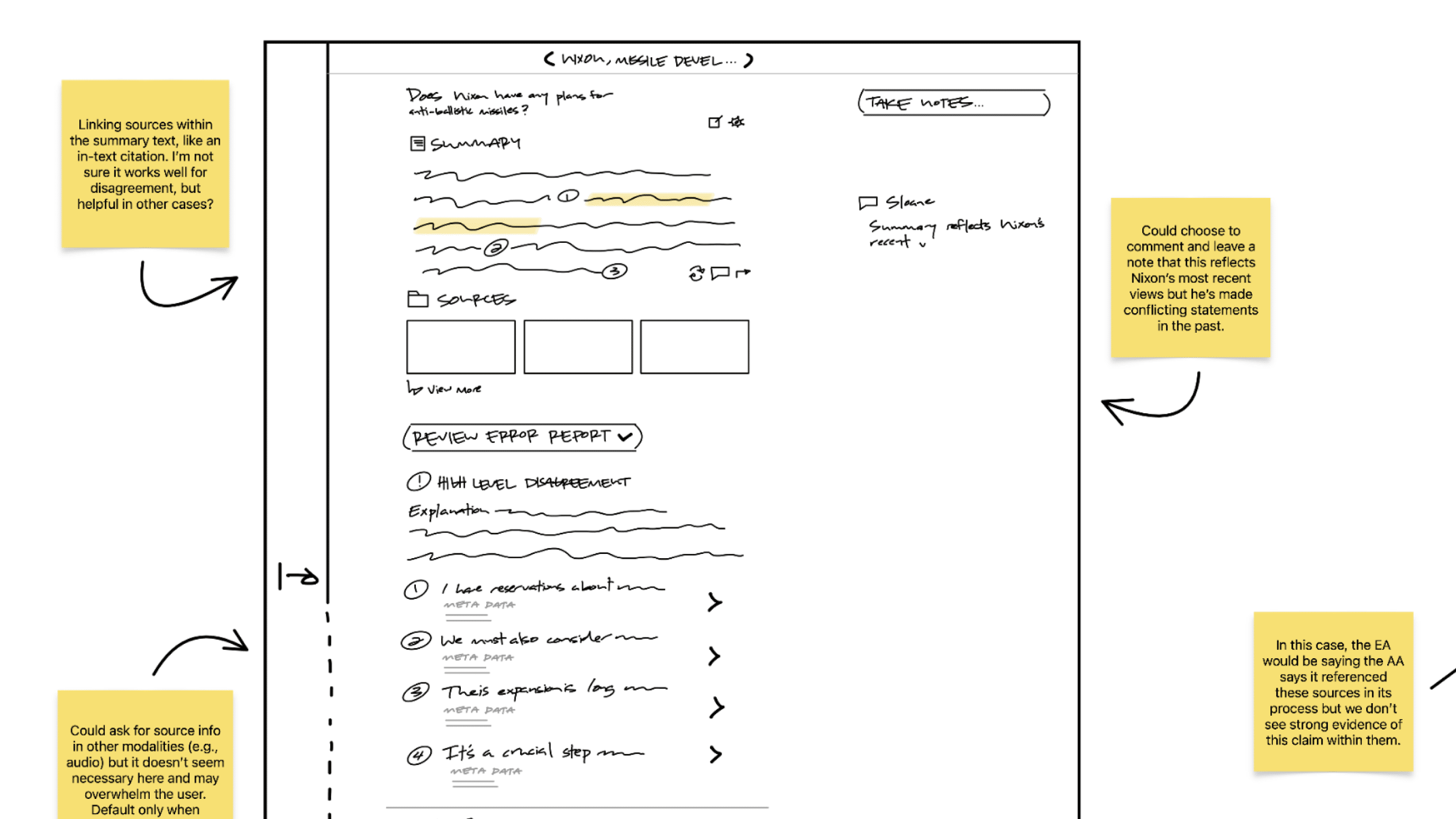

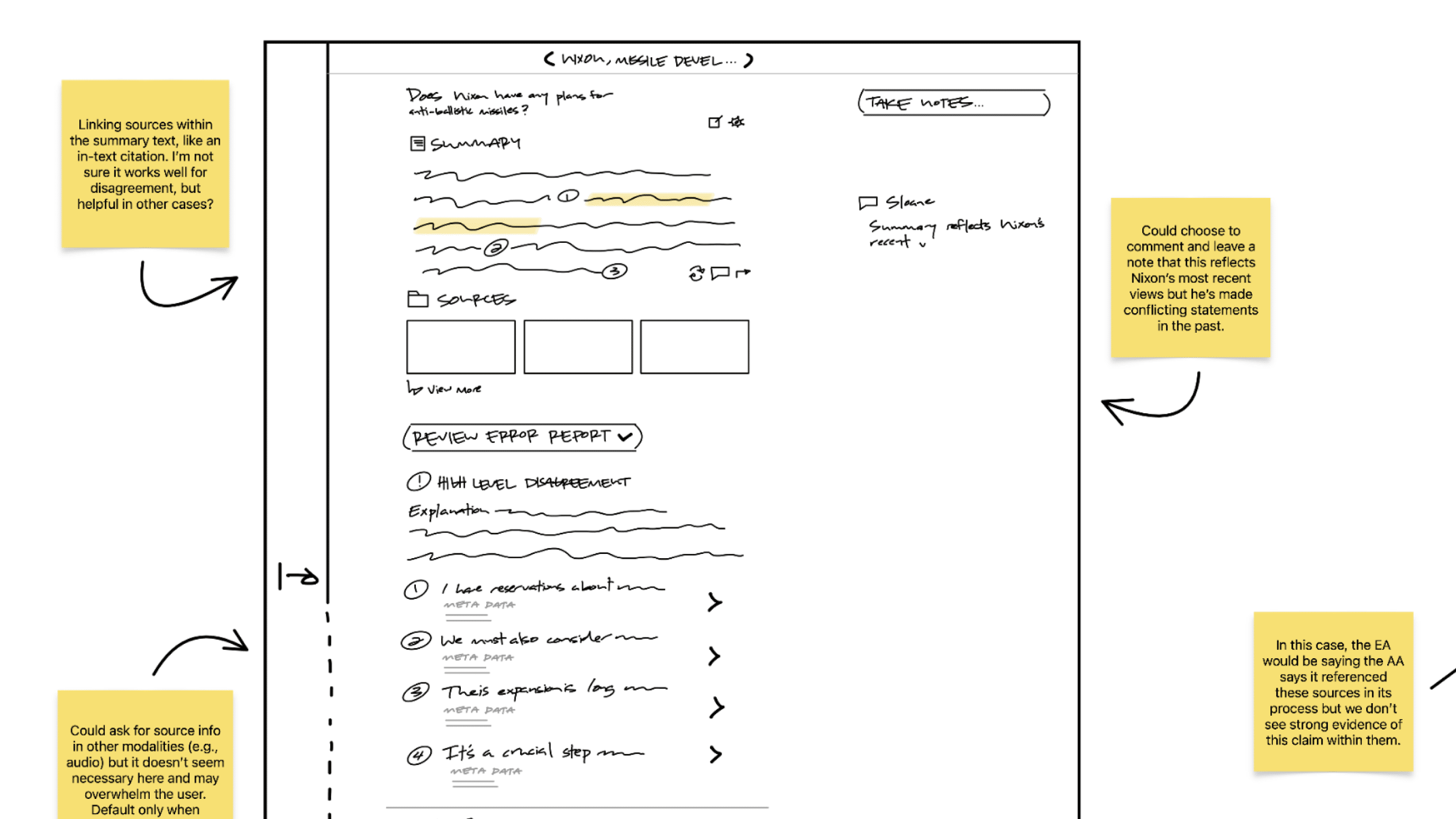

Concept 2: Agent Dialog

This concept explores multi-agent dialog as a method for trust calibration. The analyst interacts with two distinct agents: an Analytic Agent and an Evaluative Agent. The design leverages the analyst’s concept of expertise to build a clear mental model of AI capabilities and limitations.

Progressive Disclosure

The interface reduces the user’s cognitive load by progressively disclosing information in manageable increments.

Predicting User Needs

The interface anticipates user needs by adapting to interactions and recommending settings as their tasks evolve.

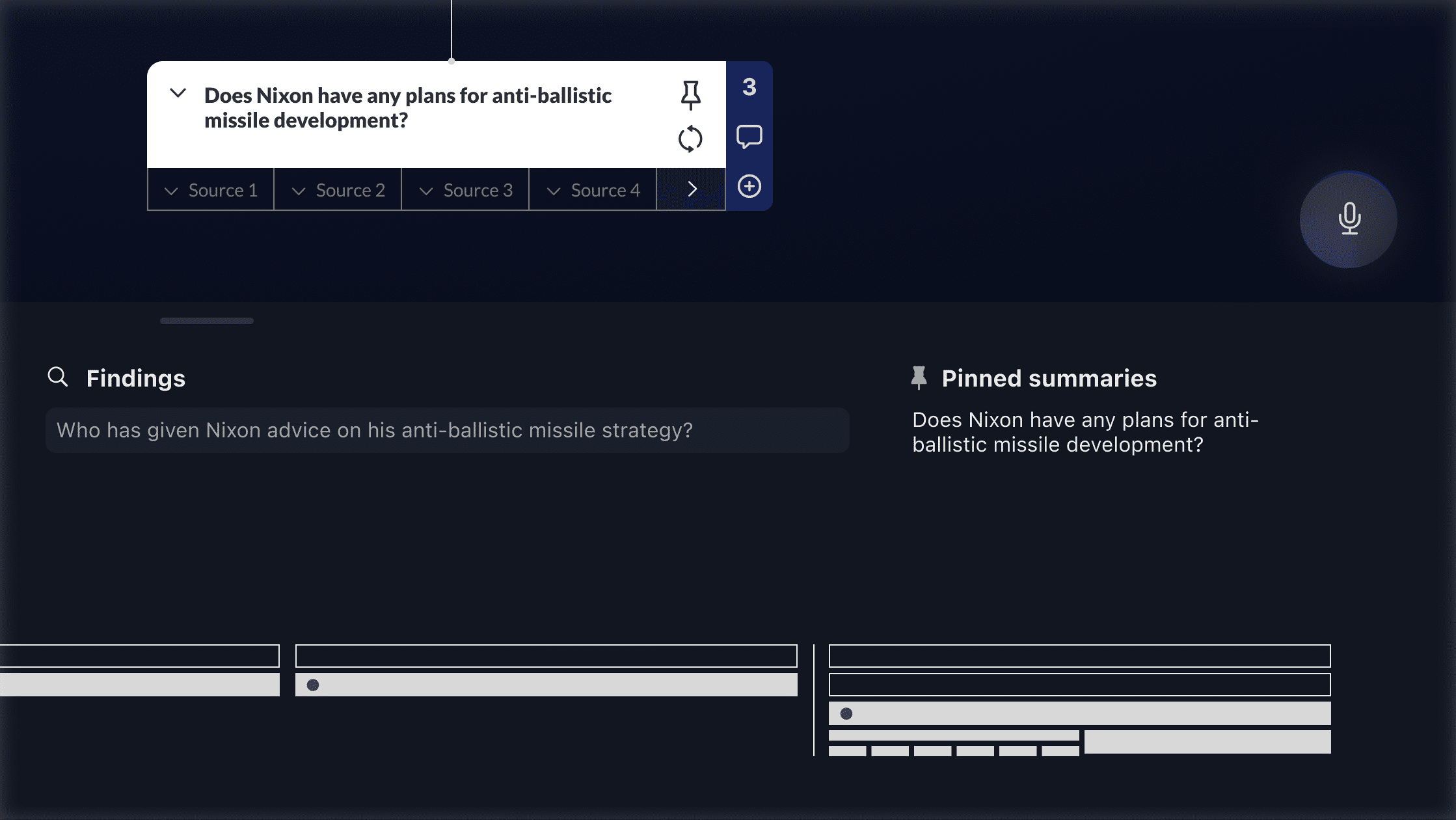

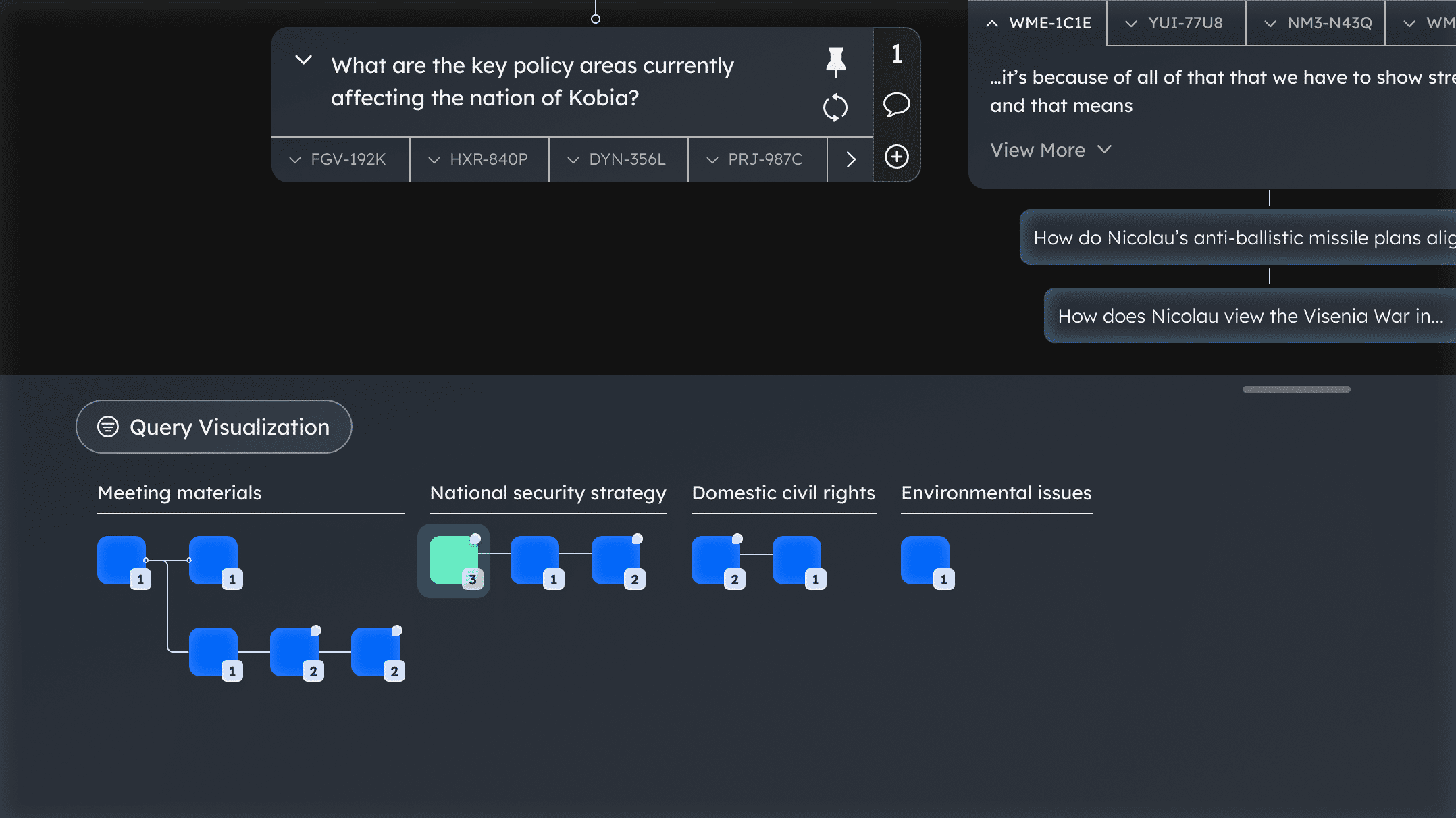

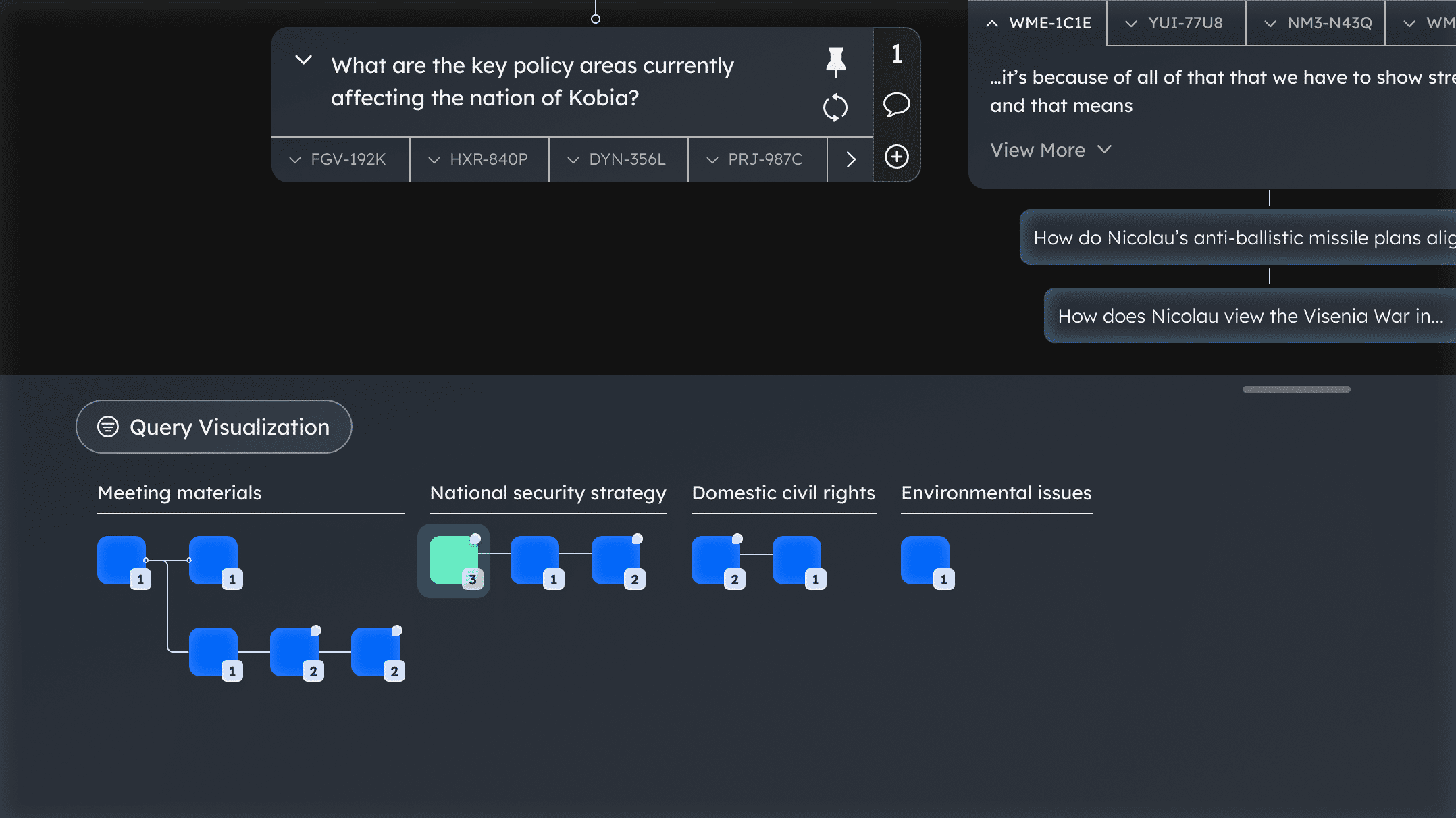

Concept 3: Nested Chat

This concept considers how context might support appropriate trust. The system adjusts its parameters according to whether the storyline is exploratory, mission-critical, or crisis-driven, and communicates these optimizations to the analyst to foster trust. The design uses nested UI elements to visually represent continual validation between user inputs and agent outputs.

Personalized Query Memory

The interface remembers a specific user’s past queries, reports, and parameter settings to tailor recommendations for specific customers and storylines.

Responding to Context

The GenUI interface adapts to support analyst workflow in changing contexts.

Process

Part 1: Visual Conventions

Understanding the Problem and Evaluating User Needs

We began by mapping how an analyst would interact with an LLM as part of their workflow, focusing on where uncertainty arises within this process. In exploratory sessions with the LAS team, we distinguished between the uncertainty inherent in intelligence traffic and the uncertainty within an LLM-generated summary.

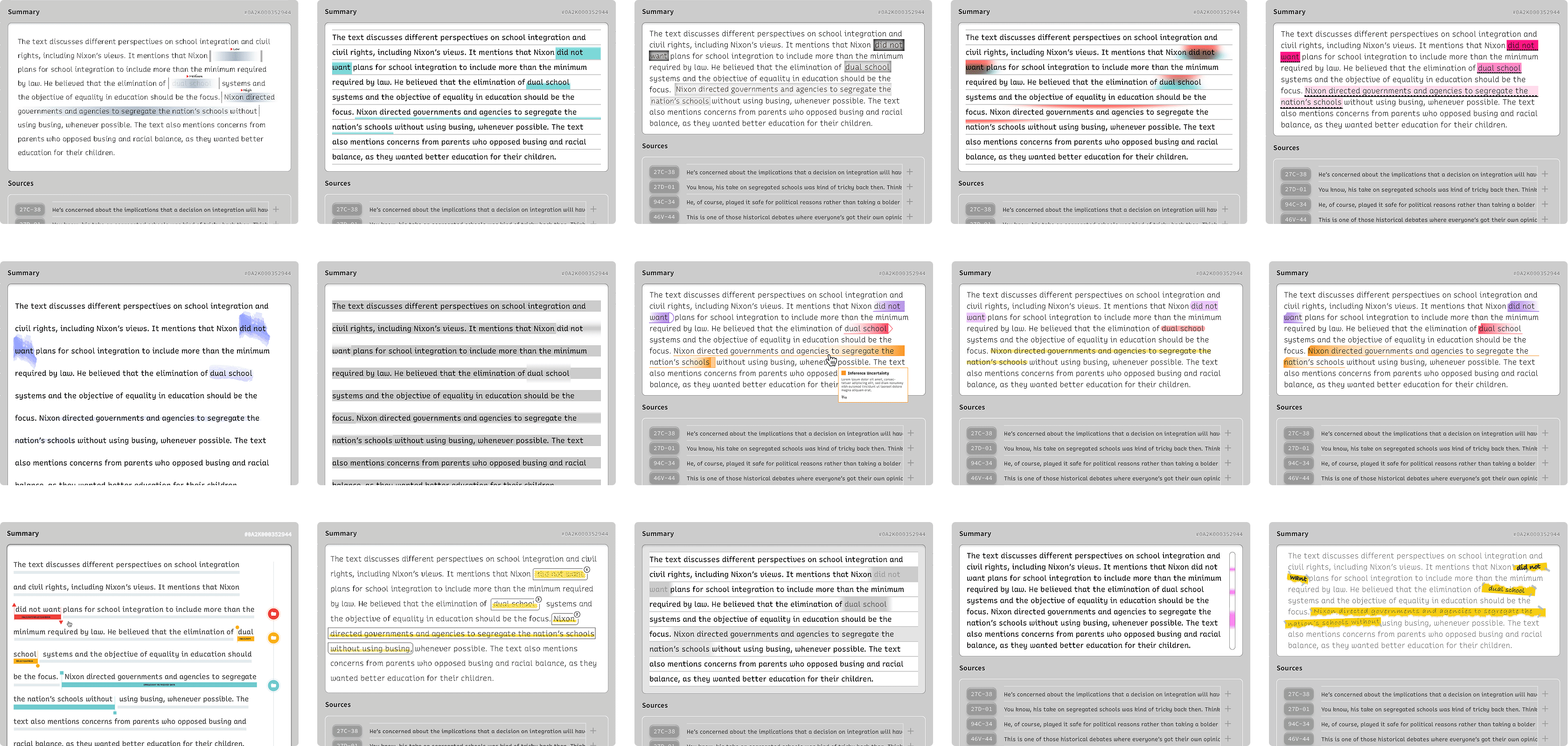

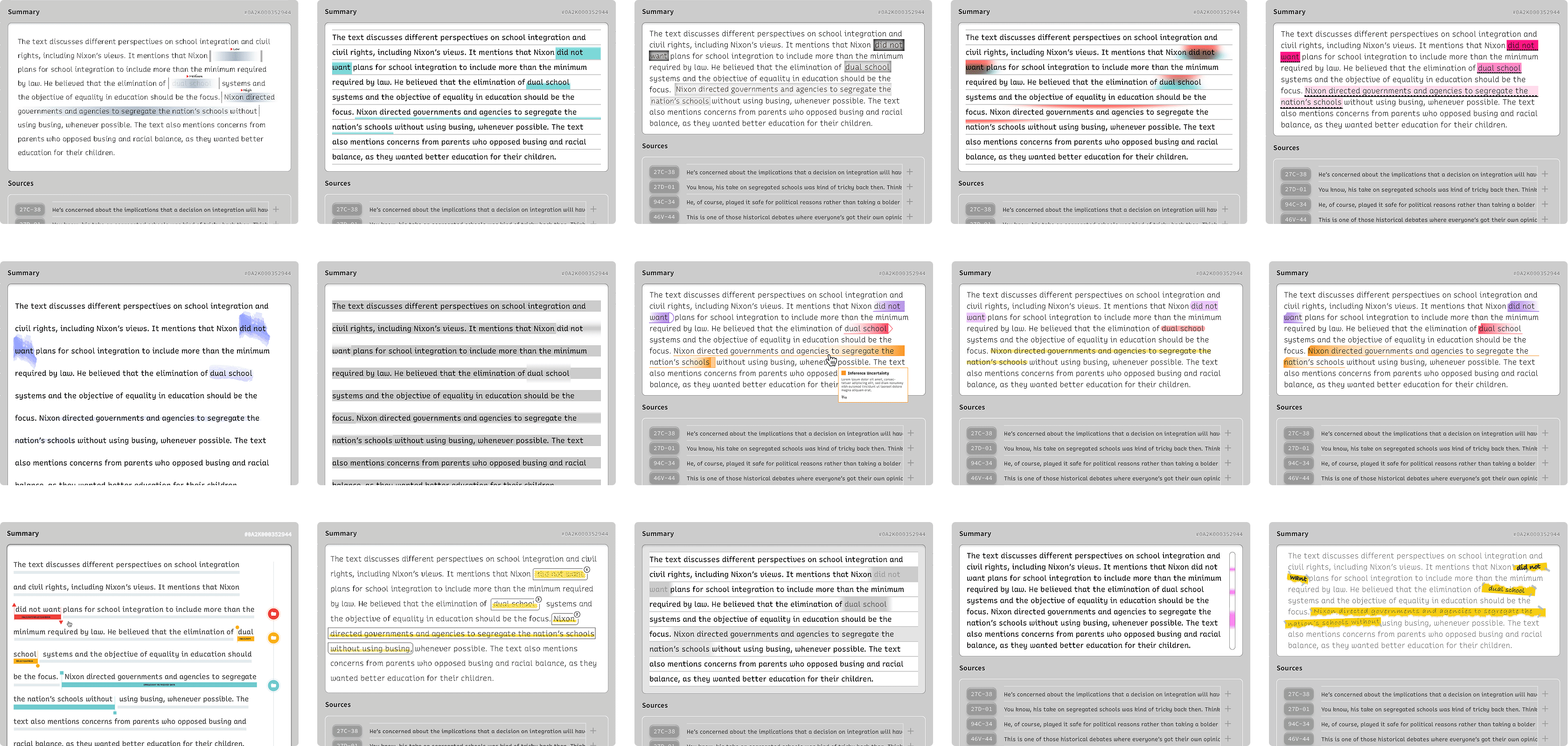

Ideation and Exploration

Fig. Sample range of visual studies

I worked closely with K. Baidoo at this stage to create a series of visual studies conveying distinct levels of uncertainty severity, aiming to reveal the full range of visual possibilities. Iterative feedback and prompts such as “Design the most ridiculous visualizations you can imagine” encouraged creative exploration, resulting in approximately 150 studies in total.

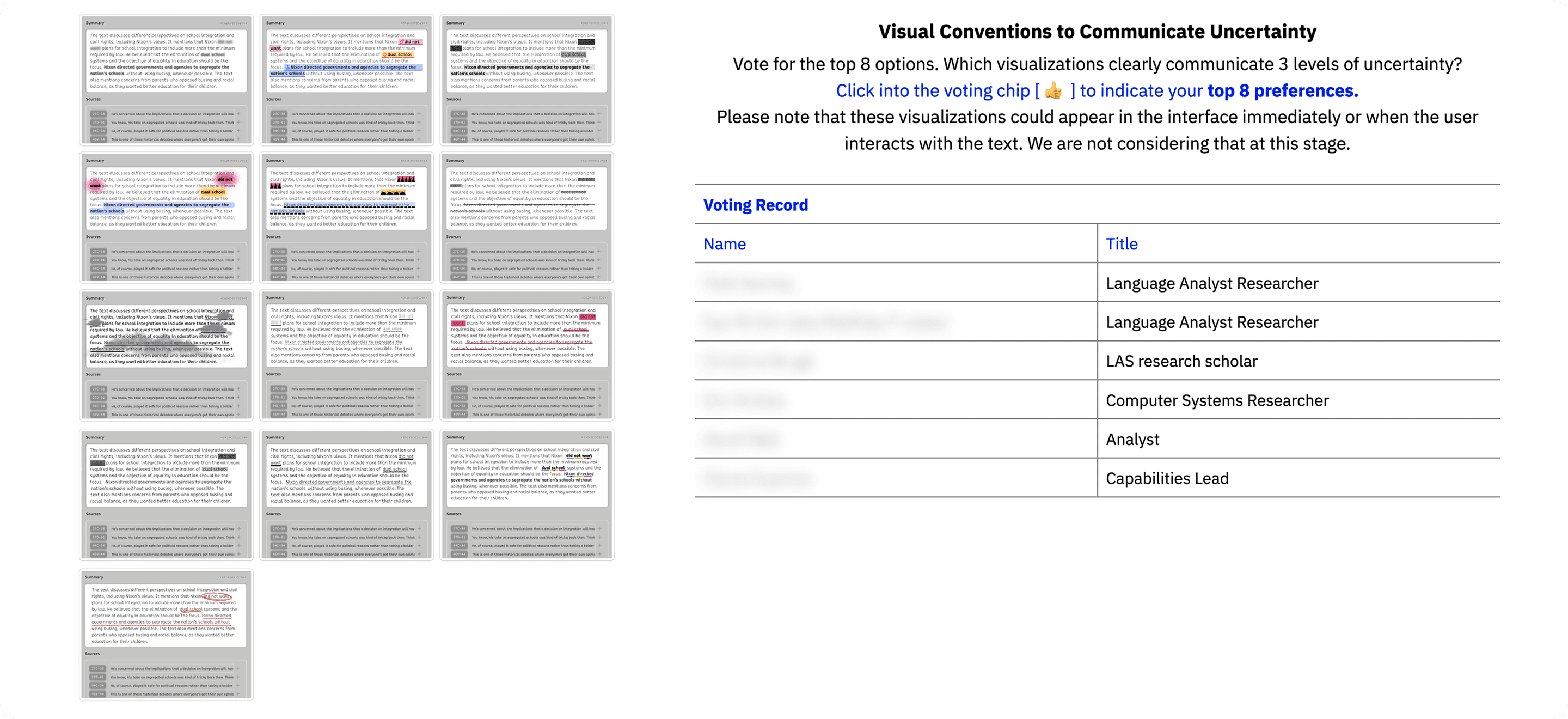

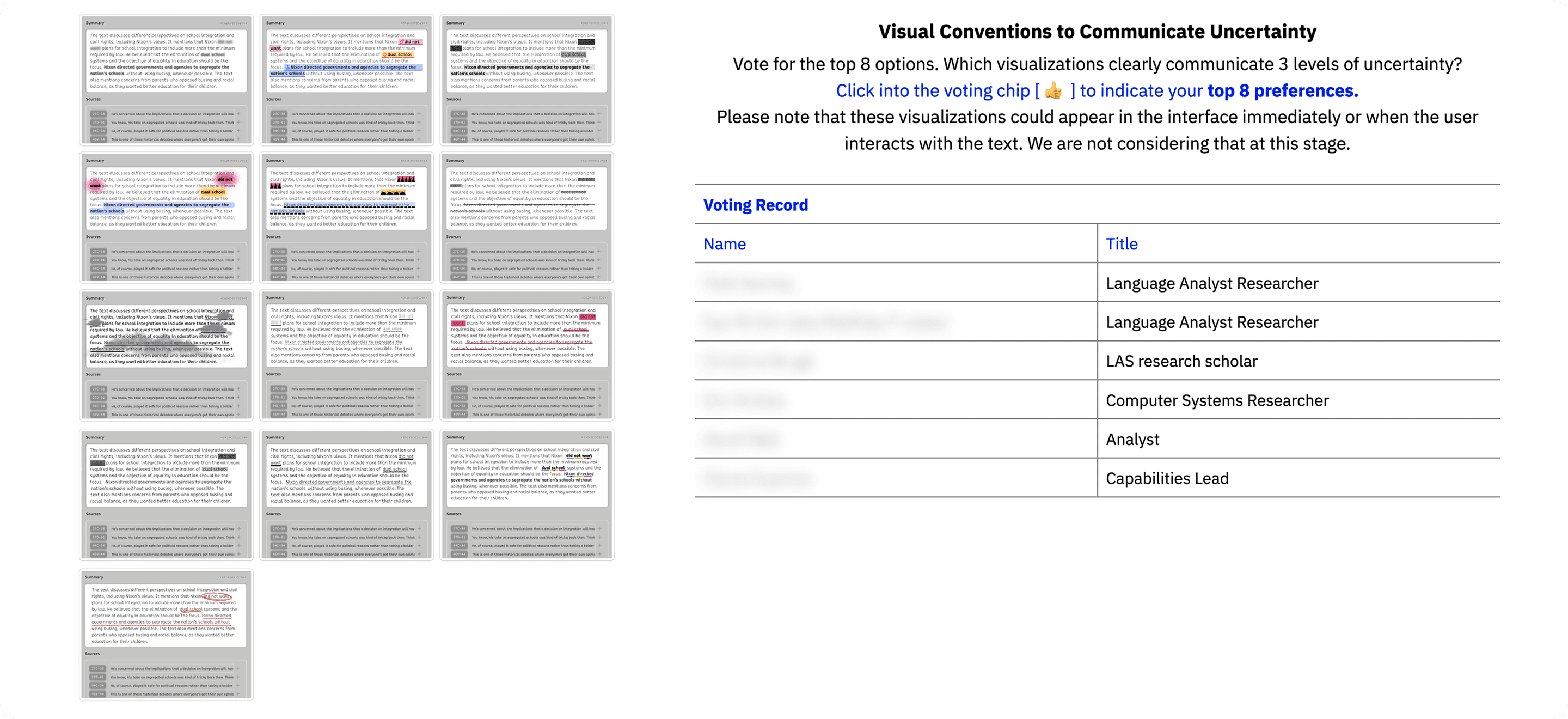

Refinement and Implementation

Fig. Analyst voting record for refined conventions

We received ongoing feedback from both the PIs and the LAS team throughout the process. We categorized our final studies by meaning type and applied emergent criteria to identify the most effective visualizations, then presented a curated collection of studies to the LAS team and facilitated a discussion to determine the most promising set of proposed conventions.

Analyst insights revealed a preference for simple and intuitive visualizations such as strikethrough (rejecting content) and transparency (reducing claims). The final eight conventions were optimized for implementation and tested for WCAG/508 contrast compliance.
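The contrast check matters most for the transparency convention, since fading text to signal a weaker claim also lowers its contrast. The following is a minimal Python sketch, not the project's actual test procedure. It assumes the WCAG 2.x relative-luminance and contrast-ratio formulas and the AA thresholds of 4.5:1 for normal text and 3:1 for large text (the revised Section 508 standards incorporate WCAG 2.0 AA by reference). It also assumes the transparency convention is rendered by lowering text opacity over an opaque background. The function names and opacity values are hypothetical illustrations.

```python
from typing import Tuple

RGB = Tuple[int, int, int]  # 8-bit sRGB channels, 0-255


def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x formula).

    WCAG 2.0 lists 0.03928 as the cutoff; the sRGB spec uses 0.04045.
    No 8-bit value falls between the two, so results are identical.
    """
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: RGB, bg: RGB) -> float:
    """(L_lighter + 0.05) / (L_darker + 0.05), ranging from 1:1 to 21:1."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def composite(fg: RGB, bg: RGB, alpha: float) -> RGB:
    """Flatten text drawn at `alpha` opacity onto an opaque background.

    Blends in gamma-encoded sRGB space, a common simplification.
    """
    return tuple(round(alpha * f + (1 - alpha) * b) for f, b in zip(fg, bg))


def passes_aa(fg: RGB, bg: RGB, alpha: float = 1.0, large_text: bool = False) -> bool:
    """Check WCAG AA contrast for (possibly semi-transparent) text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(composite(fg, bg, alpha), bg) >= threshold


if __name__ == "__main__":
    black, white = (0, 0, 0), (255, 255, 255)
    for alpha in (1.0, 0.6, 0.4):  # hypothetical opacity levels for "reduced" claims
        ratio = contrast_ratio(composite(black, white, alpha), white)
        print(f"opacity {alpha}: {ratio:.2f}:1, AA normal text: {passes_aa(black, white, alpha)}")
```

Under these assumptions, black text at 60% opacity on white still clears the 4.5:1 threshold, while at 40% it falls below even the 3:1 large-text threshold. A transparency-based convention therefore has a practical opacity floor, which is one reason fewer, clearly separated levels may be easier to keep compliant than a continuous fade.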

Part 2: Speculative Interfaces

Moving beyond the traditional dashboard format, K. Baidoo and I considered how a more fluid interface could further leverage AI capabilities to support appropriate levels of trust.

Persona: Sloane The Scanner

Sloane is a full-performance U.S. intelligence language analyst assigned to monitor and investigate the fictional country of Kobia and its president, Rysz Nicolau. Her primary responsibility is to triage large volumes of speech communications and identify information that answers intelligence requirements regarding Kobian leadership’s plans and intentions. Sloane struggles to gauge confidence and uncertainty in elements of AI-generated summaries of intelligence traffic. This challenge adds significant time to the verification process and discourages her from integrating these tools into her workflow.

We sketched out novel interface ideas and iterated on concepts based on a series of prompts related to trust calibration.

- How might the interface utilize query recommendations, nudging, verification, and source investigation to support trust calibration?

- How might a GenUI interface respond to the needs of specific users, customers, or storylines to calibrate trust between AI agents and users?

- How might interacting with multiple agents — an analytic agent and an evaluative agent — leverage a user’s concept of expertise to build an accurate mental model of AI capabilities?

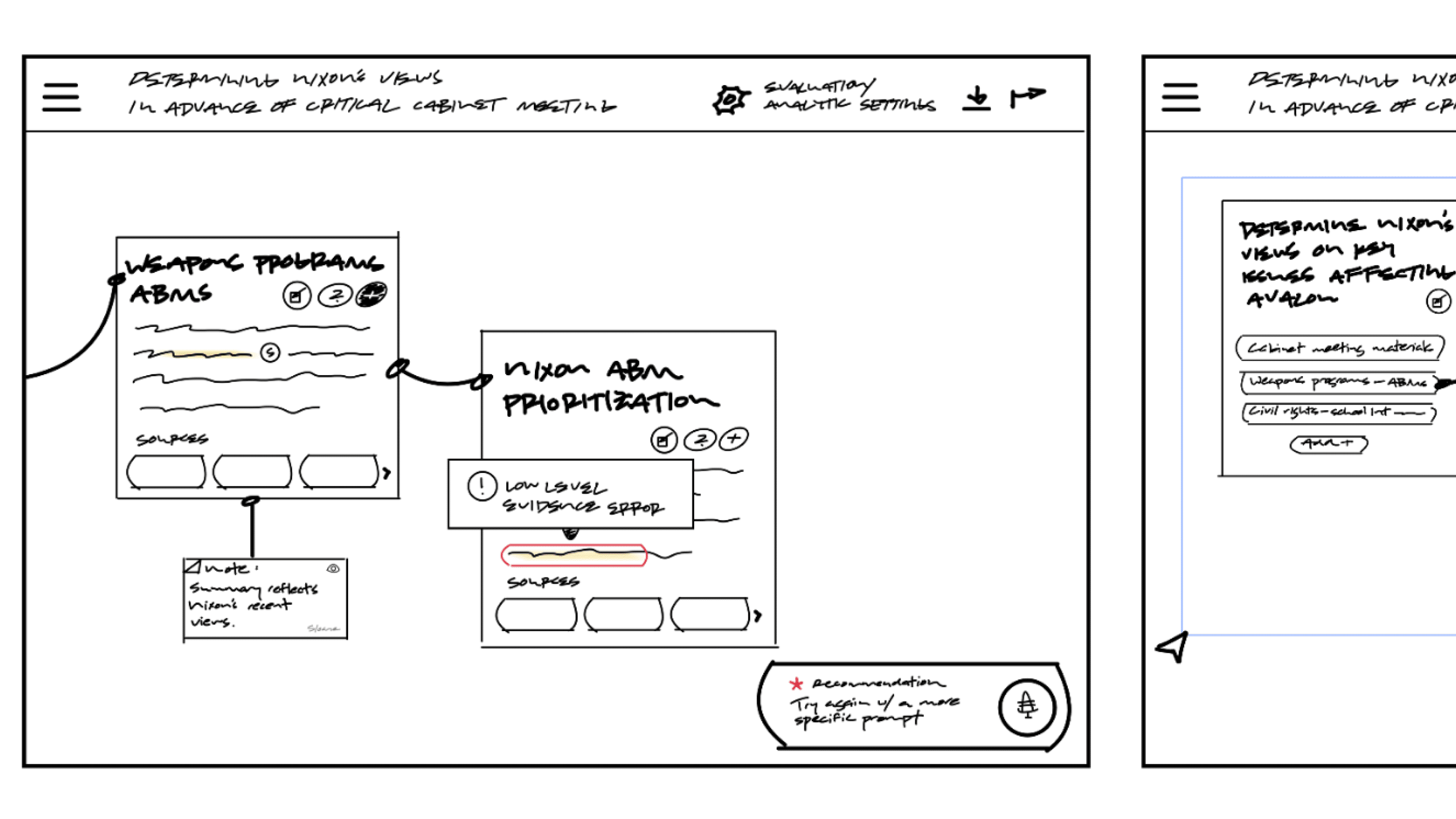

After several rounds of iteration, we narrowed our ideas to three final concepts and developed mid-fidelity wireframes in Figma. Each concept leaned into unique aspects of the original prompts and our individual explorations. During this phase, I independently built the user flow and mid-fidelity wireframes for "History Aperture" and collaborated with K. Baidoo to co-develop "Nested Chat."

Developing History Aperture

The concept was developed in response to the prompt, “How might we utilize query recommendations, nudging, verification, and source investigation via a conversational interface to calibrate trust between agents and users?”

My first iteration was a traditional infinite scroll — the interface would progressively disclose information to the analyst, but it was difficult to imagine how they would retrace their steps or organize insights.

After receiving feedback on user navigation and interaction patterns — specifically distinguishing the querying process from source verification — I reimagined the querying process within a fluid interface that leverages AI to organize and connect queries by topic.

The primary consideration at this stage was how the interface would manage scale to reduce cognitive load. To address this concern, I developed a “filing” system that automatically collapses and collates sub-explorations. Analysts can pin specific nodes for quick reference or query the system to reveal certain patterns.

The querying process is reflected in an interactive query visualization that dynamically updates as the analyst searches for and verifies information. I originally envisioned this as a taxonomy that broke topics into smaller sub-explorations, but I realized it risked creating a visual hierarchy that might mislead the analyst into believing their first query was the most important.

The final iteration combines the fluid node system with the interactive query visualization, allowing the analyst to jump in and out of different parts of their querying process. The system can also nudge the analyst to revisit past content, revealing key insights and facilitating deeper exploration.

High-Fidelity Prototypes

Once the mid-fidelity concepts and task flows were refined and approved, we worked together to build out high-fidelity prototypes, animating key interactions. These final prototypes envisioned how interface design might support trust calibration within human-machine teams in the future.

Watch the scenario videos and view the detailed project report and deliverables at the link below.

Project Site

Reflection and Impact

Through this process of exploration and discovery, several compelling questions emerged that merit further study. For example, given the opportunity, I would want to know how different types of uncertainty might affect how analysts react and what actions they might take to validate information.

On a personal level, this project taught me to think more divergently within a complex and abstract problem space, engaging in multiple processes of rapid iteration to push the boundaries of contemporary interface design. I particularly enjoyed working on the History Aperture concept because it allowed me to explore different methods of representing the information gathering process, moving beyond traditional linear formats to challenge how users currently navigate data. Collaborating on a multidisciplinary team also deepened my appreciation of the unique expertise each member offers and helped me gain a richer understanding of analyst needs and challenges from a human-machine teaming perspective.

In addition, I had the opportunity to share this project at the annual LAS Research Symposium and am honored to be collaborating with government stakeholders to improve the interpretability of speaker model confidence, building upon the work completed during this project.

Acknowledgements

This project reflects a collaboration between Graphic & Experience Design at North Carolina State University and the Laboratory for Analytic Sciences. Participating students are from the Master of Graphic & Experience Design program (in the Department of Graphic Design & Industrial Design, College of Design) and the PhD in Design program. Participants from the Laboratory for Analytic Sciences have technical expertise in language analysis, LLM technology, and psychology.

This material is based upon work done, in whole or in part, in coordination with the Department of Defense (DoD). Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the DoD and/or any agency or entity of the United States Government.